Exploring Micron’s Revolutionary HBM3E Chip

High bandwidth memory (HBM) has become a key component of AI hardware, and Micron’s latest HBM3E chip is a prominent example. The technology benefits memory-chip makers such as Micron and Samsung, and it also plays a crucial role in advancing AI capabilities. Let’s take a closer look at what makes this chip significant.

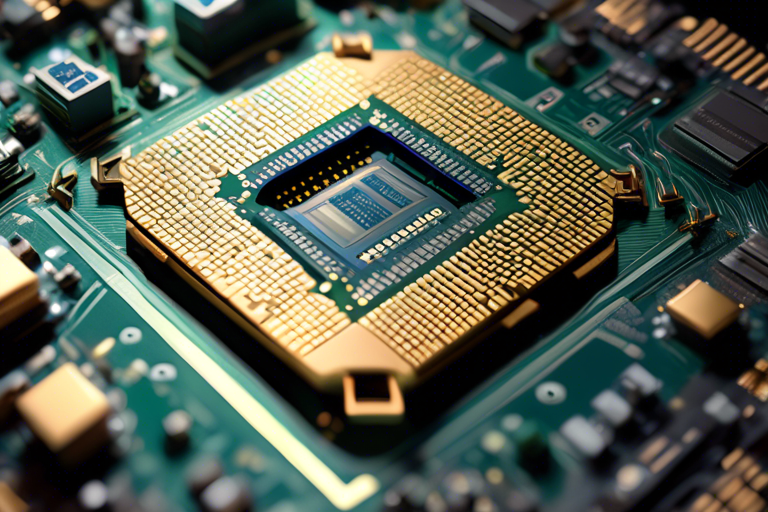

A Closer Look at the HBM3E Chip

Micron’s HBM3E chip is a high bandwidth memory chip designed specifically to power AI workloads. Its stacked design allows for high-speed data transfers and increased capacity, addressing key bottlenecks in the development of AI technologies. Here are some key features of the chip:

- The chip is smaller and thinner than a penny, so it fits into tightly packed AI hardware.

- It features eight layers of DRAM stacked on top of each other, with each layer offering 3 GB of memory.

- Collectively, the eight layers give the chip a total of 24 GB of memory for high-speed data processing in AI workloads.
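The capacity figure in the list above is simple multiplication: layers times memory per layer. As a minimal sketch (the function name `stack_capacity_gb` is made up for illustration and is not a Micron API), the arithmetic looks like this:

```python
# Figure taken from the article: each DRAM layer holds 3 GB.
GB_PER_LAYER = 3


def stack_capacity_gb(layers: int, gb_per_layer: int = GB_PER_LAYER) -> int:
    """Return the total capacity of one stacked memory chip, in GB."""
    if layers <= 0 or gb_per_layer <= 0:
        raise ValueError("layers and gb_per_layer must be positive")
    return layers * gb_per_layer


# Eight layers of 3 GB each.
print(stack_capacity_gb(8))  # 24
```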

Significance of HBM3E in AI

In the context of AI, Micron’s HBM3E chip plays a crucial role in overcoming the memory wall: the gap between how fast a processor can compute and how fast memory can supply it with data. This is a common challenge in training large language models (LLMs) like GPT. Here are some reasons why the chip is important in the AI landscape:

- It enables faster and more efficient processing of large volumes of data, which is essential for training AI models effectively.

- AI workloads are growing more complex, and high-performance AI chips like Nvidia’s Blackwell have arrived, so demand for high bandwidth memory has risen with them.

- Micron’s HBM3E chip addresses these growing memory demands with higher capacity and high-speed data transfers.
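To get a rough feel for these memory demands, the sketch below estimates how many 24 GB stacks it would take just to hold a model’s weights. Everything except the 24 GB figure is an assumption for illustration: the 70-billion-parameter model is hypothetical, 2 bytes per parameter assumes 16-bit weights, 1 GB is treated as 10^9 bytes, and the estimate ignores activations, optimizer state, and other runtime memory.

```python
STACK_GB = 24            # from the article: one 8-layer HBM3E chip
BYTES_PER_GB = 10**9     # assumption: decimal gigabytes


def stacks_needed(num_params: int, bytes_per_param: int, stack_gb: int = STACK_GB) -> int:
    """Return how many stacks are needed to hold the weights alone (rounded up)."""
    if num_params <= 0 or bytes_per_param <= 0 or stack_gb <= 0:
        raise ValueError("all arguments must be positive")
    weight_bytes = num_params * bytes_per_param
    stack_bytes = stack_gb * BYTES_PER_GB
    # Integer ceiling division avoids floating-point rounding.
    return -(-weight_bytes // stack_bytes)


# Hypothetical model: 70 billion parameters stored as 16-bit values.
print(stacks_needed(70 * 10**9, 2))  # 6
```

The point of the estimate is only that weight storage alone can span several stacks, which is why capacity per stack matters.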

Advancements in Memory Capacity

This year, Micron introduced a higher-capacity chip that features 12 layers of DRAM, providing a total of 36 GB of memory. Despite the 50 percent increase in capacity over the 24 GB chip, the new chip keeps the same compact design.

When compared side by side, the 24 GB HBM3E chip and the 36 GB variant appear identical. However, the thinner layers in the new chip allow more memory to be packed into the same physical space.
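The comparison can be checked with a little arithmetic. The sketch below uses only the article’s figures (8 layers for 24 GB, 12 layers for 36 GB) and one loudly stated assumption: that both stacks have the same total height and that all of it is shared equally among the DRAM layers. Real chips also contain a base die and bonding material, so the height ratio is an upper bound for illustration, not a Micron specification.

```python
from fractions import Fraction


def gb_per_layer(total_gb: int, layers: int) -> Fraction:
    """Return the capacity of a single layer as an exact fraction of a GB."""
    return Fraction(total_gb, layers)


def relative_layer_height(old_layers: int, new_layers: int) -> Fraction:
    """Return the new layer height relative to the old one.

    Assumption: both stacks have equal total height, split evenly across layers.
    """
    return Fraction(old_layers, new_layers)


print(gb_per_layer(24, 8))           # 3
print(gb_per_layer(36, 12))          # 3
print(relative_layer_height(8, 12))  # 2/3
```

Under that assumption, each layer still holds 3 GB, but each layer in the 12-layer chip has at most two thirds of the height available to a layer in the 8-layer chip.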

Hot Take: Embracing the Future of AI with Micron’s HBM3E Chip

Micron’s HBM3E chip sits at the forefront of AI hardware. As demand for high bandwidth memory continues to grow in the AI industry, Micron’s latest offerings pack more capacity into the same compact package, and chips like these will help unlock the potential of AI technologies.
