Revolutionizing AI Application Deployment with NVIDIA NIMs

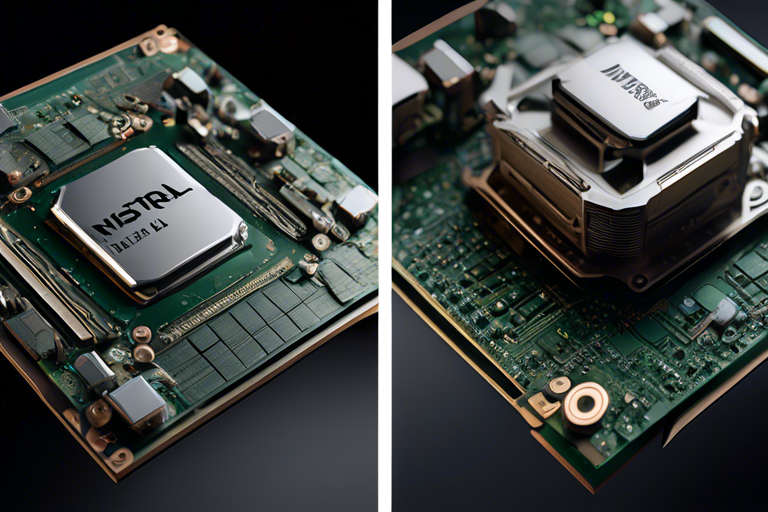

Enterprise organizations are increasingly turning to large language models (LLMs) to enhance their AI applications. NVIDIA, a key player in the AI space, has recently unveiled new NVIDIA NIMs (NVIDIA Inference Microservices) designed to streamline AI project deployments and improve inference performance and scalability.

Enhanced Performance with New NVIDIA NIMs

- Foundation models are essential but often need customization for optimal performance.

- NVIDIA’s NIMs package the Mistral and Mixtral models as prebuilt, containerized microservices that integrate into existing applications.

- NVIDIA updates the NIMs continuously, so deployments keep receiving performance optimizations and the latest AI advancements.

Introducing Mistral 7B NIM

The Mistral 7B NIM serves the Mistral 7B Instruct model, which targets tasks such as text generation and chatbots; NVIDIA reports a 2.3x performance boost for this NIM.

Meet Mixtral-8x7B and Mixtral-8x22B NIMs

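These models use a sparse Mixture of Experts (MoE) architecture: a router sends each token to only a few expert sub-networks (two of eight per layer in Mixtral), so only a fraction of the parameters is active for any given token. This makes inference faster and more cost-effective than running a dense model with the same total parameter count.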
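To make the routing idea concrete, here is a minimal pure-Python sketch of top-2 gating, the scheme Mixtral uses. It assumes a simple dot-product router and toy experts; the function names and the example experts are hypothetical and this is not Mixtral's or NVIDIA's implementation.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(token, experts, gate_weights, k=2):
    """Route one token vector through the top-k experts of a sparse MoE layer.

    token:        list of floats (the token's hidden vector)
    experts:      list of callables, each mapping a vector to a vector
    gate_weights: one router vector per expert (hypothetical dot-product router)
    Returns (output vector, indices of the experts that were used).
    """
    # Router logits: one score per expert.
    logits = [sum(t * w for t, w in zip(token, g)) for g in gate_weights]
    # Keep only the k highest-scoring experts.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the gate over the selected experts only.
    weights = softmax([logits[i] for i in top])
    # Weighted sum of the selected experts' outputs; unselected experts never run.
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)
        out = [o + w * v for o, v in zip(out, y)]
    return out, top
```

Only the k selected experts are evaluated per token, which is where the compute savings come from: with k=2 of 8 experts, roughly a quarter of the expert parameters do work for each token.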

Accelerating Deployment with NVIDIA NIM

- NIM simplifies the deployment of AI applications, improves inference efficiency, and lowers operational costs.
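NIM microservices for LLMs expose an OpenAI-compatible HTTP API, so an application can call a deployed model with an ordinary JSON request. The sketch below uses only the Python standard library; the URL (localhost, port 8000), the model id, and the helper names are assumptions for illustration, so check them against your own deployment.

```python
import json
import urllib.request

# Assumed values: adjust to match your NIM deployment.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_ID = "mistralai/mistral-7b-instruct-v0.3"  # hypothetical model id


def build_chat_request(prompt, model=MODEL_ID, max_tokens=128, url=NIM_URL):
    """Build an OpenAI-style chat completion request (not yet sent)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the first choice's message text."""
    req = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a NIM running at the assumed address, `chat("Summarize MoE in one sentence.")` would return the model's reply. Keeping request construction separate from sending makes the payload easy to inspect or test without a live server.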

Key Advantages of NIM

- High throughput and low latency for scalable AI inference.

- Seamless integration and optimized performance on NVIDIA-accelerated infrastructure.

- Robust control and security for AI applications and data.
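Throughput and latency claims are usually checked per request with a few standard measurements: time to first token, mean inter-token latency, and output tokens per second. The sketch below computes them from token arrival timestamps that you record yourself (for example while streaming a response); the function and field names are hypothetical, and the metric definitions are common conventions rather than NVIDIA's official benchmark methodology.

```python
from dataclasses import dataclass


@dataclass
class LatencyStats:
    ttft_s: float         # time from request start to first token
    end_to_end_s: float   # time from request start to last token
    inter_token_s: float  # mean gap between consecutive output tokens
    tokens_per_s: float   # output tokens divided by end-to-end time


def latency_stats(request_start, token_times):
    """Summarize one streamed response from token arrival times (seconds)."""
    if not token_times:
        raise ValueError("need at least one token timestamp")
    n = len(token_times)
    ttft = token_times[0] - request_start
    e2e = token_times[-1] - request_start
    # Average spacing between tokens after the first one arrives.
    itl = (token_times[-1] - token_times[0]) / (n - 1) if n > 1 else 0.0
    tps = n / e2e if e2e > 0 else float("inf")
    return LatencyStats(ttft, e2e, itl, tps)
```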

Future of AI Inference: NVIDIA NIMs

NVIDIA NIM is a game-changer in the world of AI inference, enabling enterprises to deploy AI applications efficiently and stay ahead in innovation.

Looking ahead, NVIDIA envisions a network of NIMs that can be combined and adapted to different tasks, shaping technology across industries.

Hot Take: Embracing NVIDIA NIMs for AI Excellence

For avid followers of the latest AI technology, integrating NVIDIA NIMs into your workflow can transform your AI projects, delivering optimized performance and the scalability needed for future innovations.
