The Launch of OpenAI’s Preparedness Team

OpenAI, the company behind ChatGPT, has introduced a Preparedness team to evaluate the risks associated with artificial intelligence (AI) models. While AI has the potential to bring positive changes to humanity, it also presents risks that governments are concerned about managing.

Functions of OpenAI’s Preparedness Team

In a blog post, OpenAI announced the establishment of the Preparedness team. Led by Aleksander Madry, director of the Massachusetts Institute of Technology’s Center for Deployable Machine Learning, the team will focus on addressing risks related to frontier AI. These risks include individualized persuasion; cybersecurity; chemical, biological, radiological, and nuclear (CBRN) threats; and autonomous replication and adaptation (ARA).

Risks of Misinformation and Propagation

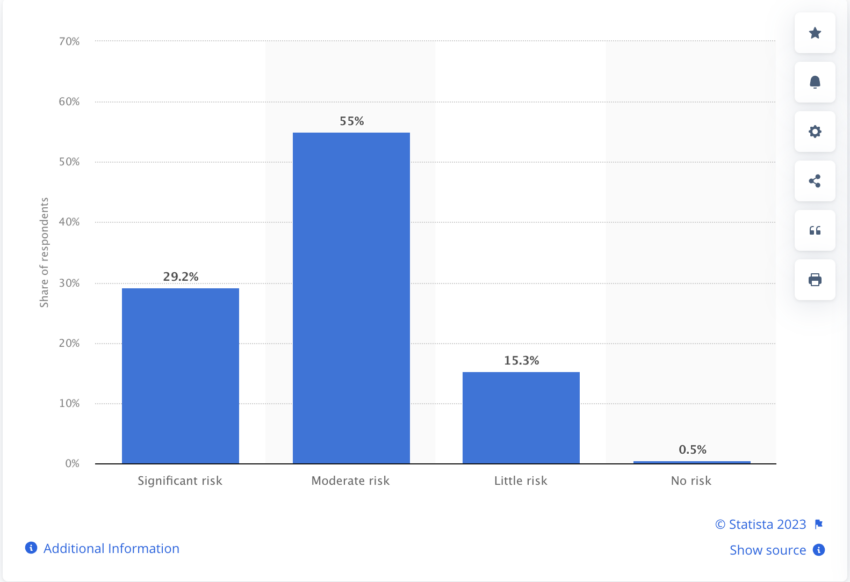

AI can also be used to spread misinformation and rumors. A survey found that many professionals worldwide believe generative AI threatens brand safety and increases the risk of misinformation. This highlights the need for effective risk management in AI development.

The Definition of Frontier AI

The UK government defines frontier AI as highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities of today’s most advanced models. OpenAI’s Preparedness team will specifically address catastrophic risks from frontier AI, as a contribution to the upcoming UK global AI summit.

Commitment to Safety and Transparency

In July, leading AI organizations such as OpenAI, Meta, and Google made commitments to prioritize safety and transparency in AI development during a White House event. These initiatives demonstrate the industry’s dedication to responsible AI practices.

UK Prime Minister’s Concerns

Recently, UK Prime Minister Rishi Sunak said he is hesitant to rush into regulating artificial intelligence. One of his concerns is that humanity could lose control over AI. This highlights the need for careful consideration and responsible governance when dealing with AI technology.

Hot Take: The Importance of Risk Assessment in AI Development

As AI continues to advance, it is crucial to assess and manage the risks associated with its development. OpenAI’s establishment of a Preparedness team demonstrates its commitment to addressing potential risks and ensuring the responsible deployment of AI technologies. By focusing on frontier AI risks and collaborating with global stakeholders, OpenAI aims to contribute to international efforts to mitigate the negative impacts of AI. This proactive approach sets a precedent for other organizations to prioritize risk assessment and management in the field of artificial intelligence.
