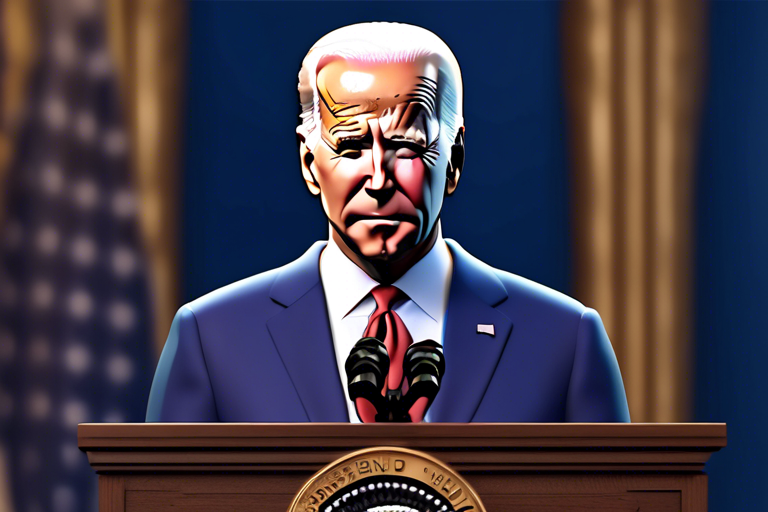

US Senators Introduce COPIED Act to Combat Deepfakes

A bipartisan group of US senators has introduced the Content Origin Protection and Integrity from Edited and Deepfaked Media Act (COPIED Act) to address the growing threat of artificial intelligence (AI) deepfakes. Led by Senators Maria Cantwell, Marsha Blackburn, and Martin Heinrich, the proposed legislation aims to establish consistent standards for watermarking AI-generated content to safeguard digital authenticity.

Deepfake Technology and the Need for Transparency

The COPIED Act would require AI service providers, including industry giants like OpenAI, to embed machine-readable origin information in their output, signaling a significant shift in the crypto and digital landscapes. According to Cantwell, this measure is crucial for ensuring transparency and protecting creators’ rights as AI continues to evolve and spread across sectors.

- By requiring watermarking of AI-generated content, the COPIED Act promotes transparency and authenticity in the digital realm.

- Industry leaders like OpenAI would have to comply with the legislation to ensure data protection and prevent misuse of content.

FTC Oversight and Compliance

Enforcement of the COPIED Act would fall to the Federal Trade Commission (FTC), which would monitor adherence to the rules and address violations as unfair or deceptive practices. This development comes at a time when debates over the ethical implications of AI technology are gaining prominence.

- The FTC would oversee compliance with the COPIED Act, ensuring that AI service providers adhere to the new requirements.

- Concerns over data privacy and security have prompted stricter regulations, with companies like Microsoft taking proactive measures to address AI-related risks.

Industry Perspectives and Future Outlook

While opinions within the digital and creative industries vary regarding the COPIED Act, many stakeholders acknowledge the necessity of such legislation to combat deepfake threats. Michael Marcotte, founder of the National Cybersecurity Center (NCC), has criticized tech companies like Google for failing to effectively combat deepfake fraud.

- Industry experts highlight the importance of proactive measures to prevent deepfake exploitation and safeguard digital content authenticity.

- The COPIED Act reflects a growing awareness of the risks posed by AI technology and the need for comprehensive regulations to mitigate potential threats.

Hot Take: Securing Digital Authenticity in the Age of Deepfakes

The introduction of the COPIED Act represents a significant step towards addressing the challenges posed by AI deepfakes and protecting digital integrity. By establishing clear guidelines for watermarking AI-generated content, this legislation aims to enhance transparency and accountability in the digital landscape, ensuring that creators retain ownership of their work in an era dominated by artificial intelligence.
